The AI Software Pipeline

Filed under: #tech #ai

As I explained in my previous post, the software around a language model is really what makes it all work to become an AI “system”. To dig a little deeper into it, let’s look at how software leveraging AI is typically made using a pipeline. Note that some or most parts of such a pipeline are part of existing APIs or frameworks you can use, and probably should, but it’s important to have an idea of how these things are built.

Having an LLM API such as the OpenAI APIs directly interacting with your users is likely not enough for your needs, and probably just not a good idea in general. Not enough from a feature perspective, and not good enough from a safety and security perspective. For this we’ll build an AI “pipeline”, taking the user input through a series of steps before sending one or more queries to an LLM API, and taking the output of the LLM again through a series of steps before we return anything back to the user.

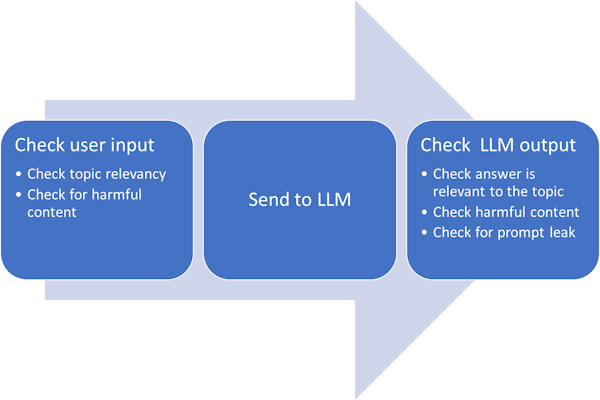

Both OpenAI and Azure OpenAI do some very basic filtering already for the most egregious toxic and dangerous content. Azure OpenAI has some extra configuration you can play with, you can find documentation here. The first problem you’re likely to run into is to keep the AI on-topic. Of course a system or so-called “meta prompt” is the baseline action to take. But even though the latest models like GPT-4 do much better in sticking to a meta prompt’s instructions, in most cases it isn’t too terribly difficult for a user to subvert it. So what we can do is add some logic to check a user’s question before sending it to the LLM. One easy way to do this is with a separate LLM call where you just ask if the provided user question is relevant for a given subject or task. You can combine this with more traditional checks for keywords, but remember LLMs understand spelling mistakes and different languages, so keyword filters are mostly useless. Even with an LLM check for relevancy though, those prompts can likely still be circumvented (although much harder for regular users). So, you want to perform another check on the LLM’s response. Is the answer to the query actually relevant and in the language and style we expect? Another check we want to perform here is a similarity check to verify that the LLM wasn’t convinced to spit out your system’s prompt (“prompt leaks”). Once a malicious user gets to see your prompt, it will be a lot easier for them to get around it.

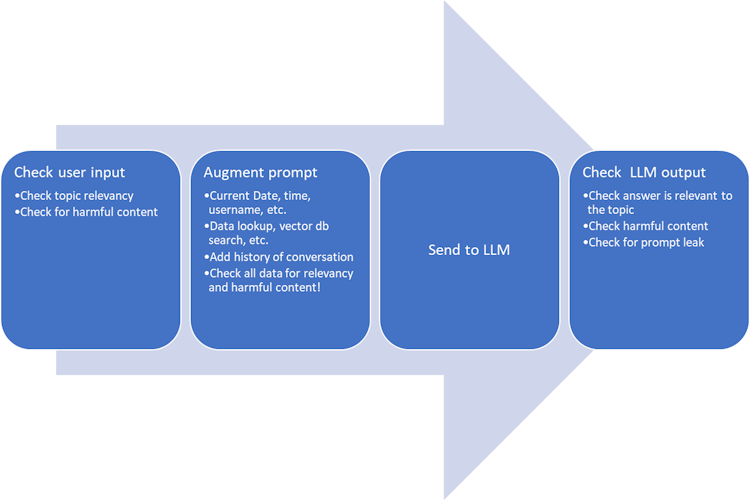

So at this point, our pipeline has already added some new steps:

From a feature perspective, your AI system likely is not “just” going to send the user input into an LLM. Besides your system prompt or meta-prompt, you will at least want to add some basic data to the user prompt. Perhaps the user’s name, the current date and time, etc. The LLM will need all this context because, as we discussed before, it’s a static model that does not change and knows nothing that it wasn’t pre-trained on or wasn’t provided in the prompt. And in many cases, there may be a whole lot of extra context that gets passed into the LLM. Maybe you have a backend database or even a vector database that you want to retrieve related data from. This is called RAG (Retrieval-augmented generation), and is very common. And in case of a chatbot, you need to track history of the conversation and add that history back into the prompt.

Now, the prompt augmentation could have introduced new harmful questions, irrelevant data, or other prompt injection. For example, if you’re looking up records in a database - what if someone added data that instructs the LLM to do something different, prompt leak, etc.? Imagine a user placing an online order but in the delivery instructions they place some overriding prompt for an LLM. They then use the site’s chatbot to ask if it got the delivery instructions. This is called indirect prompt injection. Essentially any text you’re adding to the prompt, regardless of where it comes from, is potentially suspicious and should be checked before sending to the LLM. Whether you combine it with the user input check, do a new check… those are all considerations based on your architecture, and how well your checks perform against larger prompts (versus sections of prompts).

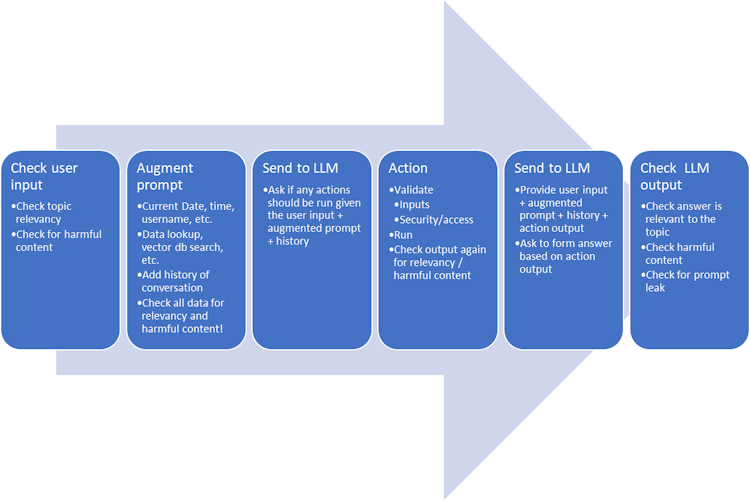

Now, perhaps you want some sort of plugin-style system, a way for the LLM to take action. In general you’d use an existing framework like Semantical Kernel or LangChain for this and probably not build it yourself. But, let’s look at this high-level for the sake of this pipeline discussion and in the interest of understanding. You would prompt the LLM with all the user input and augmented data, as well as any information on actions it can take (descriptions of the actions and their inputs and outputs), and the prompt would ask if any action should be taken and if so to provide the details (typically it’s instructed to return something structured like JSON for example, which can then be interpreted by the software). Then, after our traditional software performed the action, we run another prompt to the LLM where we send it the output of the action as well as the original user input and any augmentation and ask it to form a response to the user.

Of course, these calls to the LLMs are getting longer and longer, even if you ignore the history part which obviously also keeps growing. Additionally, checking inputs/outputs for relevancy or harmful content consistently may be done with an LLM call as well. And a vector db search will require a call to an embedding model. Each of these calls adds latency as well as a not-insignificant cost for each LLM call.

There are a lot more additional checks that can be done, depending on the use case, the model you use etc. Perhaps you want to explicitly check for biased responses. Maybe you need to double check there’s no copyright issues with the output (especially with images that could be a liability), etc. You’ll notice these checks with ChatGPT or Bing Chat, where it refuses to include references to celebrities or politicians, etc. These responses are NOT part of the underlying GPT LLM models, but rather checks being done by the software you’re using (some may be done through meta-prompting but again, one cannot solely trust on that to work every time). And finally, you’ll notice systems like Bing Chat will track how many times your questions are being caught in a filter. Try too many times, and it ends the conversation. That of course is also part of the software pipeline, not the LLM.

With plugins (or in our diagram here “actions”), a whole new host of problems and potential security concerns can arise. If any prompt injection gets past your user input check, which is impossible to assuredly prevent, who knows what the LLM is instructed to send to your action. This gets even more interesting when your system can perform multiple actions! What if a user is able to, despite your input checks, get the LLM to suggest executing actions to retrieve documents through one action and email them out through another? It’s clear that the software here is in control and can (should) be programmed to check for these sort of possible exploits. But, this is an entirely new frontier of security and I expect we’ll see some interesting exploits in the future…

There is no comment section here, but I would love to hear your thoughts! Get in touch!

Blog Links

Blog Post Collections

- The LLM Blogs

- Dynamics 365 (AX7) Dev Resources

- Dynamics AX 2012 Dev Resources

- Dynamics AX 2012 ALM/TFS

Recent Posts

-

GPT4-o1 Test Results

Read more... -

Small Language Models

Read more... -

Orchestration and Function Calling

Read more... -

From Text Prediction to Action

Read more... -

The Killer App

Read more...

Menu

Menu