The Great Text Predictor

Filed under: #tech #ai

When texting became a more popular sport, we were all still using physical keys. In fact, the keys were for the numbers you would dial phone numbers with, and to type letters each number key was assigned about 3-4 letters. You’d press a key once, you’d get the first letter. If you’d press the key twice in quick succession you’d get the 2nd letter, three times for the third. As many of my age that used the ever popular Nokia 3210 (picture below) at the time can attest, there was a certain level of pride in how fast one could spell out words. Ah, those young ‘uns and their texting!

And then dictionaries were invented. The keyboard would be set to a certain language, and the phone could use the first few letters you already typed to find words in its dictionary. You just had to confirm the right word when it would appear and you’d save yourself potentially quite a few clicks on the keyboard. Of course, sometimes the wrong words can pop up and you need to type more letters. So… phones started tracking words you used the most so they could appear first from the dictionary. Progress in productivity!

Fast forward to today’s smartphones and their text predictors. They no longer just predict words based on letters and finding words you use the most. Today, they also predict words before you’ve typed a single letter. And once you’ve typed a word, the phone suggests the next word that may make sense based on your usage and the dictionary it has (also learning new words you use it didn’t know, which bilingual texters like myself can attest usually ends up with a language-blended custom library that needs resetting every once in a while to unlearn).

This is great. We can now play along with those social media trends where you’re supposed to type a few standards words like “Tomorrow I will…” and then you just select the next few suggested words from your phone - intimately revealing to your thousands of social media followers what words your phone has learned you would type next.

Imagine though if that piece of software wouldn’t just look at the previous word. What if it looked at the whole sentence you typed so far? Based on statistics of usage it would probably come up with even better suggestions, right? What if instead of just looking at words in the sentence, it could figure out the relationship between the words in your sentence? It would probably, given enough time and usage, naturally find a statistical relationship between the subject, verbs, etc. Now what if we scale that up further? What if it can do this with multiple sentences, and figure out relationships within but also across the sentences? After enough usage, it would probably statistically know the relationship between words specifically about the subjects you usually text about. Now what if - and hear me out - we could already pre-train this? What if we pre-trained it on basically a huge portion of the internet as a data set? And now finally, what if we don’t just do one or two sentences, but give it enough size and power to find statistical relationships between words across paragraphs and paragraphs worth of sentences?

Ladies and gentlemen you’ve just been bamboozled because I gave you a greatly oversimplified explanation of how LLM (large language model) AI works.

Some of you are probably thinking - wait are you one of those guys saying LLMs are just text predictors? Well yes, I am, and certainly the early GPT2 and GPT3 models were. But there’s a catch with GPT3.5/4 models! Remember I mentioned when scaling up to multiple sentences, it would statistically figure out relationships between words - related to the subjects you usually text about. Now imagine we scale this up to so much data and allow so many relationships… the statistics coming out of this will start forming clusters of subjects and relationships. The text prediction starts becoming VERY coherent given subjects it has seen a lot of data about. And even more interestingly it seems to start making correct predictions on things it hasn’t seen at all, appearing to have statistically captured a certain essence of a subject… things like basic math.

To be clear, this is greatly oversimplified from a science perspective. But, I think, it’s a good basis to start understanding how large language models or LLMs work. And a great basis for more articles to discuss and deep-dive into all sorts of interesting aspects of LLMs and get more technical as we go along.

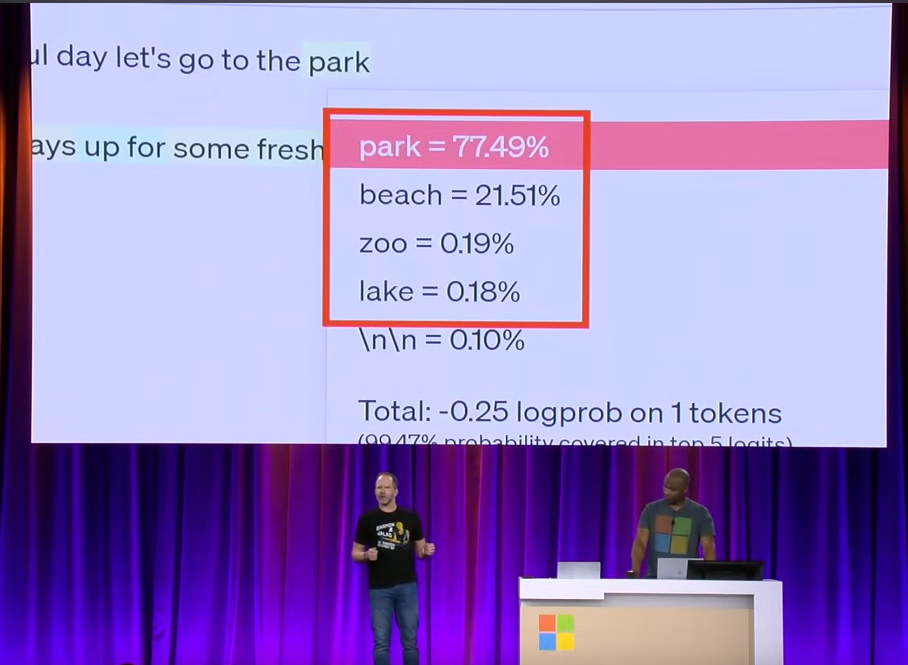

The great Scott Hanselman did some variations of this particular talk, but I’ll refer you to the Microsoft Ignite version where he immediately steps into SHOWING the audience the next word probabilities and what context means. You’re welcome to watch the whole thing, of course, but relevant to this blog I would love for you to watch just the first 10 (non-technical) minutes of this recording on YouTube: https://www.youtube.com/watch?v=5pbPLHYB6-0

There is no comment section here, but I would love to hear your thoughts! Get in touch!

Blog Links

Blog Post Collections

- The LLM Blogs

- Dynamics 365 (AX7) Dev Resources

- Dynamics AX 2012 Dev Resources

- Dynamics AX 2012 ALM/TFS

Recent Posts

-

GPT4-o1 Test Results

Read more... -

Small Language Models

Read more... -

Orchestration and Function Calling

Read more... -

From Text Prediction to Action

Read more... -

The Killer App

Read more...

Menu

Menu